COMP 310

Threads in Java

A "thread" is a single sequential flow of code execution. A thread is not the same as the code itself; a thread is the execution of code. While most code that we write can be thought of as running in a single thread, a modern computer can have hundreds of threads active at once, with as many of them running truly simultaneously as it has processor cores. Multiple threads can even be active and executing the same code at the same time! A very common multi-threaded situation in a Java program is the pairing of the thread that runs from the original call to main() and the GUI event thread that runs the GUI components.

It is very easy to create ("spawn") a new thread in Java. A common technique is to override the run() method of the Thread class:

```java
// Instantiate a thread object
Thread newThread = new Thread() {
    @Override
    public void run() {
        // The code here will run under the new thread.
    }
};

// Start the new thread running.
newThread.start(); // This runs the code in the run() method above.
```
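Calling start() is what creates the new thread; calling run() directly would simply execute the method on the current thread, like any other method call. The sketch below (StartVsRun and threadUsedBy are hypothetical names for illustration) records which thread actually executes the run() body in each case, using join() to wait for the new thread to finish.

```java
import java.util.concurrent.atomic.AtomicReference;

class StartVsRun {
    /**
     * Returns the thread that executed the run() body.
     * If useStart is true, start() is called and join() waits for the new thread;
     * otherwise run() is called directly, like an ordinary method.
     */
    static Thread threadUsedBy(boolean useStart) {
        AtomicReference<Thread> executor = new AtomicReference<>();
        Thread newThread = new Thread() {
            @Override
            public void run() {
                executor.set(Thread.currentThread()); // record who is running this code
            }
        };
        if (useStart) {
            newThread.start(); // a new thread executes run()
            try {
                newThread.join(); // wait for the new thread to finish
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
            }
        } else {
            newThread.run(); // no new thread: the caller executes run() itself
        }
        return executor.get();
    }

    public static void main(String[] args) {
        Thread caller = Thread.currentThread();
        System.out.println("start() ran on caller? " + (threadUsedBy(true) == caller));  // false
        System.out.println("run() ran on caller?   " + (threadUsedBy(false) == caller)); // true
    }
}
```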

An alternative way is to instantiate a Thread object with a Runnable object:

```java
// Instantiate a thread object
Thread newThread = new Thread(new Runnable() {
    @Override
    public void run() {
        // The code here will run under the new thread.
    }
});

// Or, equivalently, using a lambda expression (use one declaration or the other):
Thread newThread = new Thread(() -> {
    // The code here will run under the new thread.
});

// Start the new thread running.
newThread.start(); // This runs the Runnable's run() method (here, the lambda body) in the new thread.
```

The second method above is useful when you want to work with more lightweight Runnable implementations rather than the entire Thread class. Runnable is a functional interface whose single method, run(), takes no parameters and returns nothing, so it is essentially a no-parameter lambda function. Note that Thread itself implements Runnable.
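Because a Runnable is just the code to be executed, one Runnable can be handed to several Thread objects, which is one way multiple threads end up executing the same code at the same time. In this sketch, SharedRunnable and runOnThreads are hypothetical names, and an AtomicInteger (from java.util.concurrent.atomic) is used for the shared counter so that simultaneous increments are not lost.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SharedRunnable {
    /** Runs the same Runnable on threadCount threads and returns how many times it ran. */
    static int runOnThreads(int threadCount) {
        AtomicInteger runs = new AtomicInteger();        // thread-safe counter shared by all threads
        Runnable task = () -> runs.incrementAndGet();   // a no-parameter lambda implementing run()

        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(task); // every thread executes the same code
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join(); // wait until each thread has finished
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return runs.get();
    }

    public static void main(String[] args) {
        System.out.println(runOnThreads(4)); // 4
    }
}
```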

When multiple threads access the same variables or fields, Java does NOT guarantee that all threads see the same data in memory!

Java reserves the right to perform certain caching-type optimizations (for example, keeping a copy of a value in a processor register or cache), so that different threads may actually be accessing different copies of the data. Thus, if one thread updates the value of a variable, there is no guarantee that another thread will see that updated value!

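To force Java to make every write visible to all threads, so that they effectively share a single, common memory location for the value, mark the field with the keyword volatile, e.g.

```java
volatile Integer myValue;
```

Note that volatile only guarantees visibility. It does not make compound operations such as myValue++ (a read followed by a separate write) atomic, so two threads incrementing the same volatile field can still lose updates.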
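A common use of volatile is a stop flag that one thread sets and another thread polls. The sketch below uses hypothetical names (VolatileStopFlag, startWorker, requestStop, workerStops); without volatile on stopRequested, the worker thread would not be guaranteed to ever see the update and could spin forever.

```java
class VolatileStopFlag {
    // volatile guarantees that a write by one thread is visible to later reads by other threads.
    private volatile boolean stopRequested = false;

    /** Starts a worker thread that spins until stopRequested becomes true. */
    Thread startWorker() {
        Thread worker = new Thread(() -> {
            while (!stopRequested) {
                Thread.onSpinWait(); // hint that this is a busy-wait loop (Java 9+)
            }
        });
        worker.start();
        return worker;
    }

    void requestStop() {
        stopRequested = true; // guaranteed to become visible to the worker thread
    }

    /** Starts a worker, asks it to stop, and reports whether it ended within 5 seconds. */
    static boolean workerStops() {
        VolatileStopFlag flag = new VolatileStopFlag();
        Thread worker = flag.startWorker();
        flag.requestStop();
        try {
            worker.join(5000); // wait at most 5 seconds
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + workerStops());
    }
}
```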

It can be difficult to transfer data from one thread to another because the two threads are running independently of each other. The first thread doesn't know if or when the second thread is ready to accept the data, and the second thread doesn't know if or when the first thread has the data ready for it to use. Add to that the fact that several threads may be trying to read and/or write the same piece of data simultaneously, and the potential for incorrect or, worse, corrupted data rises dramatically. Unfortunately, how to safely and efficiently transfer data between threads is a deep, complex topic that is far beyond the scope of this course. So, for our purposes, we will only discuss a few particular aspects of the overall subject that should cover most of our needs.

Basic Mantras

Minimize the number of threads that access any given piece of data. Use encapsulation to isolate the data accessed by different threads (different threads --> different objects). This reduces the "sphere of influence" of any problems that might arise.

Minimize the number of threads that attempt to mutate any given piece of data. This minimizes contention and reduces the likelihood of invalid updates.

Minimize the dependence of a thread on the particular value and timing of any data it reads. That is, the more the thread can deal with the data "as is", the better. Threads whose only job is to display values without analyzing them are examples of this behavior. This helps decouple the threads from the data.
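As one illustration of the first two mantras, the sketch below gives each worker thread its own private Tally object, so no object is shared while the threads run; the main thread only reads the tallies after join() has confirmed that each worker is finished. The class and method names are hypothetical, and summing numbers merely stands in for real work.

```java
import java.util.ArrayList;
import java.util.List;

class ConfinedWorkers {
    /** Each worker owns one Tally; no other thread touches it while the worker runs. */
    static class Tally {
        private long sum = 0;
        void add(int value) { sum += value; } // safe: only the owning thread calls this
        long sum() { return sum; }
    }

    /** Sums 1..n by interleaving the range across the given number of workers. */
    static long parallelSum(int n, int workers) {
        List<Tally> tallies = new ArrayList<>();
        List<Thread> threads = new ArrayList<>();
        for (int w = 0; w < workers; w++) {
            Tally tally = new Tally();  // different threads --> different objects
            int start = w + 1;          // worker w handles start, start + workers, ...
            tallies.add(tally);
            threads.add(new Thread(() -> {
                for (int i = start; i <= n; i += workers) {
                    tally.add(i);
                }
            }));
        }
        threads.forEach(Thread::start);

        long total = 0;
        for (int w = 0; w < workers; w++) {
            try {
                threads.get(w).join(); // join() makes the worker's writes visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for workers", e);
            }
            total += tallies.get(w).sum();
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(1000, 4)); // 500500
    }
}
```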

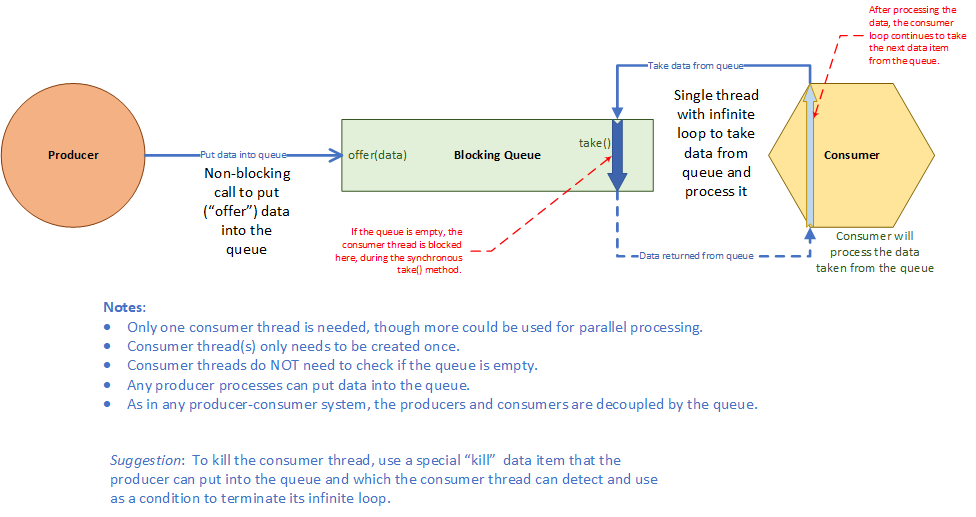

The producer-consumer model is the notion that one thread produces data that is used ("consumed") by another thread. Notice how this model has one thread whose job is solely to write the data and one thread whose job is solely to read the data. This is consistent with the basic mantras expressed above.

The problem is that the consumer doesn't know when the producer's data is ready. Likewise, the producer doesn't know if the consumer is ready to accept the data. Thus a producer-consumer implementation must satisfy two needs:

The data produced needs to be cached somewhere until the consumer is ready to use it.

The consumer thread must block until the data is ready for it.

Luckily, Java provides pre-built data structures that solve the producer-consumer problem handily:

java.util.concurrent.BlockingQueue<E> -- the interface that defines a blocking queue that holds objects of type E. This defines a general, many-element queue.

java.util.concurrent.ArrayBlockingQueue<E> -- an implementation of BlockingQueue that uses an array of E as its back-end storage. We can define a queue of a particular fixed size with this implementation.

java.util.concurrent.LinkedBlockingQueue<E> -- an implementation of BlockingQueue that uses a linked list of E as its back-end storage. The queue grows and shrinks as needed; a maximum capacity can optionally be given to the constructor, and without one the capacity is Integer.MAX_VALUE, i.e. effectively unbounded.

java.util.concurrent.SynchronousQueue<E> -- an implementation of BlockingQueue that is like a zero-length ArrayBlockingQueue. That is, a producer that calls put() is blocked until a consumer removes the element, while offer() does not wait at all and simply returns false if no consumer is already waiting to receive the element. Conversely, the producer will not block if there is a waiting consumer. Thus there are never data elements waiting in the queue. This type of blocking queue can be quite useful, especially if the consumers are always faster than the producers, but only if the producers can tolerate the possibility of being blocked.
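The differences in capacity show up directly in what offer() returns. This sketch (QueueCapacities and acceptedOffers are hypothetical names) offers five values to each kind of queue with no consumer running:

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;

class QueueCapacities {
    /** Offers the values 1..attempts to the queue and returns how many offers succeeded. */
    static int acceptedOffers(BlockingQueue<Integer> queue, int attempts) {
        int accepted = 0;
        for (int i = 1; i <= attempts; i++) {
            if (queue.offer(i)) { // offer() never blocks; it returns false when there is no room
                accepted++;
            }
        }
        return accepted;
    }

    public static void main(String[] args) {
        // Fixed capacity of 2: the third and later offers are refused.
        System.out.println(acceptedOffers(new ArrayBlockingQueue<>(2), 5)); // 2
        // Default capacity is Integer.MAX_VALUE, so in practice every offer succeeds.
        System.out.println(acceptedOffers(new LinkedBlockingQueue<>(), 5)); // 5
        // No consumer is waiting in take(), so there is nobody to hand an element to.
        System.out.println(acceptedOffers(new SynchronousQueue<>(), 5));    // 0
    }
}
```

The SynchronousQueue refuses every offer here because no consumer is waiting in take() at the moment of each offer.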

The methods we will need are:

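boolean BlockingQueue<E>.offer(E x) -- puts the value x at the end of the queue without blocking. Returns false if there is no room left in the queue.

E BlockingQueue<E>.take() -- removes and returns the first value in the queue. Does not return until there is a value to return. Note that this method throws InterruptedException if the waiting thread is interrupted, so it must be called inside a try-catch block (or from a method that declares InterruptedException).

There are also other methods, such as put(E x), which blocks the producer thread if there is insufficient room in the queue for the next piece of data, and poll(long timeout, TimeUnit unit), which waits at most the given time for a value and returns null if none arrives, but the use of these methods is less common.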
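A sketch of put(), take() and a timed poll() working together, assuming a hypothetical PutTakePoll class: a producer thread put()s values into a one-element ArrayBlockingQueue while the calling thread take()s them, so each side blocks whenever it gets ahead of the other.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class PutTakePoll {
    /** Transfers the values 0..count-1 from a producer thread to the caller through a 1-element queue. */
    static List<Integer> transfer(int count) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(1);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++) {
                    queue.put(i); // blocks while the single slot is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // stop producing if interrupted
            }
        });

        List<Integer> received = new ArrayList<>();
        producer.start();
        try {
            for (int i = 0; i < count; i++) {
                received.add(queue.take()); // blocks until a value is available
            }
            producer.join();
            // poll() with a timeout waits at most that long and returns null if nothing arrives.
            Integer extra = queue.poll(100, TimeUnit.MILLISECONDS);
            if (extra != null) {
                received.add(extra); // not expected: the producer sent exactly count values
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return received;
    }

    public static void main(String[] args) {
        System.out.println(transfer(5)); // [0, 1, 2, 3, 4]
    }
}
```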

A typical usage looks like this:

```java
// shared by both threads
BlockingQueue<E> bq = new LinkedBlockingQueue<>();

// producer thread code:
...
// create data piece
bq.offer(data); // the latest data is offered to the consumer.
// continue for next data piece
...

// consumer thread code:
try {
    E data = bq.take(); // will block here until data is ready
    // process data and loop around to get the next one.
    ...
} catch (InterruptedException e) {
    // the consumer was interrupted while waiting for data
}
```

With this sort of implementation, the producer can create data as fast or slow as it wants and the consumer will always pick up that data, whether it becomes ready for that data before or after the producer offers it out.
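A complete, runnable version of the typical usage above might look like the following. It is a sketch: the ProducerConsumer class, its run() method and the "item-" strings are hypothetical, and the consumer takes a known number of items only so that the example terminates (stopping an open-ended consumer is discussed in the warning later on this page).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class ProducerConsumer {
    /** Runs one producer and one consumer connected by a LinkedBlockingQueue. */
    static List<String> run(int count) {
        BlockingQueue<String> bq = new LinkedBlockingQueue<>(); // shared by both threads
        List<String> consumed = new ArrayList<>();             // written only by the consumer thread

        Thread producer = new Thread(() -> {
            for (int i = 0; i < count; i++) {
                String data = "item-" + i; // create data piece
                bq.offer(data);            // unbounded queue, so offer() always succeeds here
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < count; i++) {
                    String data = bq.take(); // blocks until the producer has offered something
                    consumed.add(data);      // "process" the data
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        consumer.start(); // starting the consumer first is fine: take() simply waits
        producer.start();
        try {
            producer.join();
            consumer.join(); // join() also makes the consumer's list updates visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(run(3)); // [item-0, item-1, item-2]
    }
}
```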

A single BlockingQueue can be used to distribute data created from multiple producers to multiple consumers. This makes it a very useful concept in highly scalable multi-processing environments.
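The sketch below, with hypothetical names, connects several producers and several consumers through one LinkedBlockingQueue; any consumer may receive any producer's data. To keep the example finite, each consumer takes a fixed share of the items, which assumes the total number of items divides evenly among the consumers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicLong;

class ManyToMany {
    /**
     * Each of the producer threads offers the numbers 1..itemsPerProducer into one shared queue;
     * the consumer threads take from the same queue and add what they get to a shared total.
     * Assumes producers * itemsPerProducer is divisible by consumers.
     */
    static long run(int producers, int consumers, int itemsPerProducer) {
        BlockingQueue<Integer> bq = new LinkedBlockingQueue<>();
        AtomicLong total = new AtomicLong(); // thread-safe accumulator
        int takesPerConsumer = producers * itemsPerProducer / consumers;

        List<Thread> threads = new ArrayList<>();
        for (int p = 0; p < producers; p++) {
            threads.add(new Thread(() -> {
                for (int i = 1; i <= itemsPerProducer; i++) {
                    bq.offer(i);
                }
            }));
        }
        for (int c = 0; c < consumers; c++) {
            threads.add(new Thread(() -> {
                try {
                    for (int i = 0; i < takesPerConsumer; i++) {
                        total.addAndGet(bq.take()); // any consumer may receive any producer's item
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }));
        }
        threads.forEach(Thread::start);
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return total.get();
    }

    public static void main(String[] args) {
        // 3 producers each send 1..100 (sum 5050), so the expected total is 3 * 5050 = 15150.
        System.out.println(run(3, 2, 100)); // 15150
    }
}
```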

A simple example of using a 1-element blocking queue can be seen when considering obtaining input from the GUI from a non-GUI-event thread.

A very instructive pathological example of thread deadlocking can be encountered when attempting to access the GUI from an RMI processing thread.

Instead of creating a new thread for every received datapacket, a blocking queue can be used to process incoming data on a single processing thread.

The advantages of this technique are

The disadvantages of this technique are

```java
// Queue holds type-narrowed datapackets holding type-narrowed messages
BlockingQueue<MyDataPacket<? extends IMessageType>> bq = new LinkedBlockingQueue<>();

// In some startup code, e.g. a start() method or constructor:
{
    // Start the single data processing thread
    (new Thread(() -> {
        try {
            while (true) { // See discussion below if it is desirable to be able to stop this thread.
                MyDataPacket<? extends IMessageType> datapacket = bq.take(); // Thread blocks while no data available.
                datapacket.execute(visitor); // process the datapacket
            }
        } catch (InterruptedException e) {
            // take() was interrupted while waiting: let the thread end.
        }
    })).start();
}

/**
 * Receive and process a datapacket with minimal blocking of the caller.
 * @param datapacket The received datapacket
 */
public void receiveDatapacket(MyDataPacket<? extends IMessageType> datapacket) {
    bq.offer(datapacket); // Put the received data into the queue
    // bq.offer() doesn't block the thread, so this method returns immediately, releasing the caller.
}
```

A very similar technique can be used to send datapackets and thus avoid blocking internal threads. However, some caution should be exercised when sending to multiple recipients, because an errant remote receiver could tie up the data-sending thread.
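For experimenting outside the course framework, here is a self-contained sketch of the same single-processing-thread idea. It assumes plain String "datapackets" and a hypothetical Consumer<String> handler in place of MyDataPacket and datapacket.execute(visitor). The processing thread is marked as a daemon thread only so that this demo cannot keep the JVM alive; that does not solve the thread-lifetime problem described in the warning below.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

class SingleThreadReceiver {
    private final BlockingQueue<String> bq = new LinkedBlockingQueue<>();

    /** Starts the one processing thread; handler stands in for datapacket.execute(visitor). */
    SingleThreadReceiver(Consumer<String> handler) {
        Thread processor = new Thread(() -> {
            try {
                while (true) {
                    handler.accept(bq.take()); // packets handled one at a time, in arrival order
                }
            } catch (InterruptedException e) {
                // interrupted while waiting: end the thread
            }
        });
        processor.setDaemon(true); // demo only: don't let this thread keep the JVM alive
        processor.start();
    }

    /** Called by the receiving layer; returns immediately because offer() doesn't block here. */
    void receiveDatapacket(String packet) {
        bq.offer(packet);
    }

    /** Sends three packets and waits (at most 5 s) for all of them to be processed. */
    static List<String> demo() {
        List<String> processed = Collections.synchronizedList(new ArrayList<>());
        CountDownLatch done = new CountDownLatch(3);
        SingleThreadReceiver receiver = new SingleThreadReceiver(packet -> {
            processed.add(packet);
            done.countDown();
        });
        receiver.receiveDatapacket("hello");
        receiver.receiveDatapacket("world");
        receiver.receiveDatapacket("!");
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new ArrayList<>(processed);
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [hello, world, !]
    }
}
```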

WARNING: Thread and memory explosion potential with blocking queues

A hidden problem with using blocking queues this way is the operating thread that services each queue, which Java does not allow to be safely terminated from outside. Java provides no safe way to "kill" a thread (the old Thread.stop() method is deprecated) because of the deadlocking and data-corruption problems that it could cause if the thread held locks on any objects. Moreover, a running thread is never garbage collected, and neither is anything it references, such as the queue it is blocked on. This means that even if a blocking queue is no longer being used by anything else, it won't get garbage collected and its processing thread won't automatically stop. If too many processing threads are created, this could lead to memory leaks and an uncontrolled increase in the number of threads being used by the system. A symptom of this issue is a marked increase in the application's memory and CPU usage.

The thread used by the blocking queue must be explicitly stopped when the blocking queue is no longer being used. This can get a little tricky depending on the situation at hand, so one must be very careful.

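Threads do have an interrupt() method. If the target thread is blocked in a method such as take(), sleep() or wait(), that method will throw an InterruptedException in the thread's execution code; otherwise the thread's interrupted status is set, and its next such blocking call throws. This exception can be caught and the thread can then exit. Unfortunately, Java security restrictions (interrupt() invokes the target thread's checkAccess() method, which may throw a SecurityException) only allow thread interruption under very particular situations that may not always be available in an application. It is crucially important to thoroughly test one's application under many conditions to make sure that a thread interruption is actually allowed under ALL the situations that your application will encounter!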
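A sketch of interrupt-based shutdown, assuming a hypothetical InterruptibleProcessor class: stop() interrupts the processing thread, the pending take() throws InterruptedException, and the loop exits. Any elements still in the queue at that moment are simply abandoned, and how many get processed before the interrupt varies from run to run.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

class InterruptibleProcessor {
    private final BlockingQueue<String> bq = new LinkedBlockingQueue<>();
    private final AtomicInteger processedCount = new AtomicInteger();
    private final Thread processor = new Thread(this::processLoop);

    private void processLoop() {
        try {
            while (true) {
                bq.take();                        // throws InterruptedException if interrupted
                processedCount.incrementAndGet(); // stands in for processing the element
            }
        } catch (InterruptedException e) {
            // interrupt() was called: leave the loop so the thread ends
        }
    }

    void start() { processor.start(); }

    void receive(String packet) { bq.offer(packet); }

    /** Interrupts the processing thread and waits (at most 5 s) for it to end. */
    boolean stop() {
        processor.interrupt(); // subject to the security check described above
        try {
            processor.join(5000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !processor.isAlive();
    }

    static boolean demo() {
        InterruptibleProcessor p = new InterruptibleProcessor();
        p.start();
        p.receive("a");
        p.receive("b");
        return p.stop(); // true if the thread really ended
    }

    public static void main(String[] args) {
        System.out.println("stopped: " + demo());
    }
}
```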

Another drawback of interrupting the processing thread is that the thread could be in the middle of legitimately processing an element taken from the queue. If that processing code itself calls a method that responds to interruption, it may be aborted partway through, which could have very damaging effects on the system, so one has to be extremely careful when deliberately interrupting threads.

"Kill messages":

One technique to get around both the Java thread security restrictions and the uncontrolled interruption problem is to use a "kill message". This is a special data element that can be placed into the blocking queue. When the thread pulls an element out of the queue, it checks to see if the element is a kill message; if it is, the thread immediately exits. Because the kill message waits in line behind any data already in the queue, the thread exits only between elements, never in the middle of processing one.
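A minimal sketch of a kill message, assuming String data elements and a hypothetical KILL sentinel object (with the course's datapackets, a dedicated kill message type would be the natural equivalent). The sentinel is compared by identity (==), so an ordinary element that happens to contain the text "KILL" is still processed normally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class KillMessageDemo {
    // A unique object used as the kill message; compared by identity, never by content.
    private static final String KILL = new String("KILL");

    /** Processes the given packets on a separate thread, then stops it with a kill message. */
    static List<String> run(List<String> packets) {
        BlockingQueue<String> bq = new LinkedBlockingQueue<>();
        List<String> processed = new ArrayList<>(); // written only by the processing thread

        Thread processor = new Thread(() -> {
            try {
                while (true) {
                    String packet = bq.take();
                    if (packet == KILL) {
                        return; // kill message: exit cleanly between elements
                    }
                    processed.add(packet); // normal processing is never cut off midway
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        processor.start();

        for (String packet : packets) {
            bq.offer(packet);
        }
        bq.offer(KILL); // queued after the data, so everything before it is processed first

        try {
            processor.join(); // returns once the thread has seen the kill message
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return processed;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("a", "b", "KILL", "c"))); // [a, b, KILL, c]
    }
}
```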

When running multiple threads, there are a number of interesting problems that can arise which, if not handled properly, can lead to race conditions and other potentially harmful ramifications:

Most of the classes and interfaces that Java provides to handle concurrent processes are in the java.util.concurrent package. To help understand the various concurrency capabilities of Java, there is a nice must-read Java concurrency tutorial.

Here are a few of the classes and interfaces from the java.util.concurrent package that might be useful for you:

There are many more possibilities for concurrent processing which are best left to courses specifically geared towards them. So browse through the java.util.concurrent package and its sub-packages, java.util.concurrent.atomic and java.util.concurrent.locks, as well as the Java concurrency tutorial. Ask the course staff to help you with anything you don't understand but looks potentially useful to you!
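As a small taste of java.util.concurrent.atomic: incrementing a shared int (even a volatile one) with count++ from several threads can lose updates, because the read and the write are separate steps. An AtomicInteger performs the whole increment atomically. The class and method names below are hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

class AtomicCounterDemo {
    /** Each of the given number of threads increments one shared counter incrementsPerThread times. */
    static int count(int threads, int incrementsPerThread) {
        AtomicInteger counter = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.incrementAndGet(); // atomic read-modify-write: no updates are lost
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(count(4, 100_000)); // 400000
    }
}
```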

For additional information see the How-To page: Synchronizing Threads that Share Data

© 2017 by Stephen Wong