Nonnegativity and Support Constraints Recursive Inverse Filtering (NAS-RIF) Algorithm

After working with the IBD algorithm for a while, we looked into other options, including simulated annealing, NAS-RIF, and higher-order statistics methods. The only one that could be implemented in a relatively simple manner was NAS-RIF. NAS-RIF was developed to deal with the problems that the IBD method has with convergence and uniqueness [1]. It is less restrictive about the form the PSF may take, but it does require that the PSF be absolutely summable (BIBO stable). The NAS-RIF algorithm converges far better than its IBD counterpart, but its performance in a noisy environment leaves something to be desired. NAS-RIF also has a big computational advantage over IBD: the time it takes to process an image is proportional to the square of the filter size, whereas for IBD it is proportional to the square of the image size.

Algorithm for blurring images with an asymmetric, Gaussian-based, randomly altered PSF: defocus.m

Diagram and information reference:

The term that was used to update u looks something like this:

(where sgn(x) is the sign of x, +1 or -1, and the gamma term is used only if the background color is black, i.e. 0)
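For reference, the cost function usually stated for NAS-RIF is consistent with the note above about sgn and the gamma term. Writing f for the filtered image (the blurred image g convolved with the filter u) and L_B for the background level, it has the form

J(u) = sum over the support of f^2 * (1 - sgn(f)) / 2 + sum outside the support of (f - L_B)^2 + gamma * (sum(u) - 1)^2

The following is a minimal Python sketch that evaluates this cost, not the code we actually ran. The names `convolve_same`, `nas_rif_cost`, `support`, `background`, and `gamma` are illustrative assumptions, and zero padding at the image border is assumed.

```python
def convolve_same(g, u):
    """Zero-padded 2-D convolution of image g with filter u.

    The output has the same shape as g, with the filter centred on each pixel.
    """
    rows, cols = len(g), len(g[0])
    fr, fc = len(u), len(u[0])
    cr, cc = fr // 2, fc // 2
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            acc = 0.0
            for i in range(fr):
                for j in range(fc):
                    yy = y - (i - cr)
                    xx = x - (j - cc)
                    if 0 <= yy < rows and 0 <= xx < cols:
                        acc += u[i][j] * g[yy][xx]
            out[y][x] = acc
    return out


def nas_rif_cost(g, u, support, background=0.0, gamma=0.0):
    """Evaluate the NAS-RIF cost J(u) for blurred image g and filter u.

    support[y][x] is True inside the assumed object support (hypothetical mask).
    """
    f_hat = convolve_same(g, u)
    cost = 0.0
    for y, row in enumerate(f_hat):
        for x, value in enumerate(row):
            if support[y][x]:
                # (1 - sgn(f)) / 2 is 1 for negative pixels and 0 otherwise,
                # so only negative values inside the support are penalised.
                if value < 0.0:
                    cost += value * value
            else:
                # Outside the support, penalise deviation from the background.
                cost += (value - background) ** 2
    if background == 0.0:
        # The gamma term is only used for a black (zero) background, where it
        # rules out the trivial all-zero filter.
        filter_sum = sum(sum(r) for r in u)
        cost += gamma * (filter_sum - 1.0) ** 2
    return cost
```

The filter u would then be updated by a gradient-based minimiser of this cost. The nested loops make the per-pixel work grow with the number of filter taps, which is why the filter size matters for run time.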

Examples:

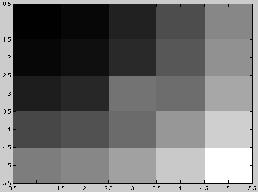

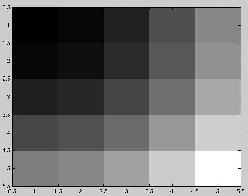

original, blurred image

original, blurred image

image with d = 0.05 (less than 5% change between u(k) and u(k-1)), d = 0.01, d = 0.001

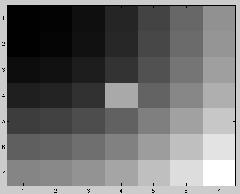

psf for (d=.05) 7x7 filter, 5x5 d=0.01, d=0.001(~500 iterations)

a fuzzy cat, a more evenly fuzzy cat.

PSF estimate from the algorithm for the fuzzy cat
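The tolerance d in the examples above is a bound on how much the filter u changes from one iteration to the next. A minimal Python sketch of such a stopping test follows. It assumes the change is measured as a Euclidean norm relative to the previous filter, which is not specified here, and the names `relative_change` and `has_converged` are illustrative.

```python
import math


def relative_change(u_new, u_old):
    """Relative Euclidean change between two filters of the same shape."""
    diff = 0.0
    ref = 0.0
    for row_new, row_old in zip(u_new, u_old):
        for a, b in zip(row_new, row_old):
            diff += (a - b) ** 2
            ref += b ** 2
    if ref == 0.0:
        # No meaningful reference scale yet, so never report convergence.
        return math.inf
    return math.sqrt(diff / ref)


def has_converged(u_new, u_old, d=0.05):
    """True once the filter changed by less than the fraction d."""
    return relative_change(u_new, u_old) < d
```

A smaller d lets the iteration run longer before stopping, which matches the larger iteration count noted for d = 0.001.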

1.