Lab 7 - Performance Profiling

Lab goals:

- Understand how to use performance profiling tools and to interpret their results.

Performance Profiling

Often, you may want to understand more about the

performance of your program. Of course, when you are

developing your program, you should understand its

asymptotic big-O

complexity behavior. But frequently

you may want to know how your program actually performs on

real input data.

Modern computer architectures are sufficiently complicated (by features like pipelining and caching) that static analyses cannot accurately or precisely describe a program's running time. What we are left with are dynamic analyses, i.e., running or simulating programs and analyzing what actually happened.

Whole-Program Profiling

You might already have discovered the simplest possible Unix timing tool:

time

Running the shell command

time program arguments

will run the given program with those

arguments, and when the program completes, it will

then report timing statistics about this execution to the

standard error file (generally, the screen). Many shells

(e.g., bash, csh, and

tcsh) have a built-in time command,

and there is also a standard time as a

separate external program in

/usr/bin/time.

All versions of the time command report the

elapsed (wall clock

) time, as well as the

user

time and the system

time. The latter two

count CPU time spent on that program, broken down,

respectively, into time the computer was running the

program itself (the user time

) and time the computer

was running in various low-level functions (i.e., the

operating system) on behalf of that program (the system

time

). CPU time is when the computer is actually

computing for your program rather than running someone

else's program or simply sitting idle (e.g., while waiting

on you to type some input). Most versions of the

time command also report a few other

statistics, such as the amount of memory and the number of

file system I/O operations performed in the program's

execution.

In this lab, we will just be using the

time command built into the

shell.

More Detailed Profiling

Often, developers are interested in more detailed

performance profiling of programs than can be provided by

the time command, generally including some or

all of the following:

- the number of times each function is called,

- the time spent executing in each function, and

- the size of the heap over time.

How could you measure these? One way is that we could change the program's source code to track them:

- We could add a counter for each function. At the beginning of each function, we would increment the corresponding counter.

- At the beginning and end of each function, we could

get the current time (e.g., using

gettimeofday()) and compute the time consumed. In other words, add a stopwatch to each function. - And we could modify the memory allocator to periodically report the current memory usage.

Note that in each case, the addition of this profiling code will change the program's performance and thus the results, at least a little.

We could integrate the first two of these with the

printf-style of debugging advocated earlier in

the lab on debugging. However, more

simply, Unix has standard, classic tools for this type of

performance profiling:

gprofgcov

perf

Unfortunately, Unix has no standard tool for profiling memory (e.g., heap) usage, although several such debugging and profiling tools are described here.

The gprof Tool

gprof is a tool for analyzing a program's

execution time and breaking this time down among all the

various defined functions in the program. In essence, it is

a tool for (conceptually, at least) automatically adding a

stopwatch to each function. However, gprof

actually collects the data to show where the time is spent

in executing the program instead by periodically (at a very

high frequency) sampling where the program is currently

executing at each sample time. This statistical

sampling approach achieves essentially the same effect

(or very close to it) with much lower execution

overhead.

Using gprof is a three-step process:

-

Compile the program with the

-pgflag to the C compiler. This adds code into the resulting executable to do the profiling. -

Run the program:

./program arguments

The resulting profiling information is put in a

raw

form into the filegmon.outin the current directory. -

Run

gprofon the program, which interprets the information ingmon.outand prints a human-readable summary:gprofprogram > outputBy default, this produces a lot of output! For each function, it shows the percentage of time spent in that function and how that time is broken down into the functions it calls. If you link the program with the

-staticflag, the output includes the percentage of time spent in functions from the system libraries, too.To view all of this output generated by

gprof, the command line above redirects the standard output into a file, and you can then view the contents of that file, for example using the commandlessoutputThe program

lessdisplays a screenful of the file and then pauses. To see more, type the SPACE key; each time, this will show the next screenful. To exit thelessprogram, type theqkey.Various

gprofcommand-line options are also available to limit what output is generated bygprof.

For more details, use man gprof, or

look at the complete

gprof manual.

See also B&O Section 5.14.1.

The gcov Tool

The program gcov is similar to

gprof. But, rather than breaking down

the time spent in each function, it counts the number of

times each line of code is executed. It is

designed to help analyze testing coverage during

execution of a program (thus the name gcov),

for example finding statements in the source code that were

not executed and thus not tested during execution, but the

line execution counts are useful for simple performance

analysis as well.

Using gcov is a four-step process:

-

Compile each of the source files (e.g.,

source1.c,source2.c,source3.c, etc.) of the program with the-fprofile-arcs -ftest-coverageflags to the C compiler. This adds code into the resulting executable to do the profiling, and creates correspondingnotes

files (e.g.,source1.gcno,source2.gcno,source3.gcno, etc.) to record information for later correlating the performance data to specific points in the source files. The suffix

stands for.gcnogcovnotes. -

Link and run the program. The resulting profiling information is put in

raw

form into corresponding output files (e.g.,source1.gcda,source2.gcda,source3.gcda, etc.). The suffix

stands for.gcdagcovdata. -

Run

gcovon each of your sources files (e.g., run

,gcov source1.c

,gcov source2.c

, etc.) to format that raw profiling information into the human readable files corresponding to each of your source files (e.g.,gcov source3.csource1.c.gcov,source2.c.gcov,source3.c.gcov, etc.). -

Look at these resulting

*.gcovfiles.

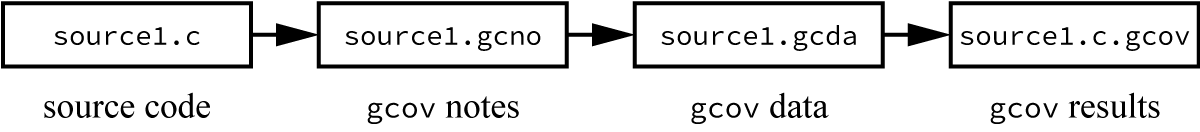

The figure below illustrates this multistage process for

using gcov and shows the resulting files for

each starting source code file (e.g.,

source1.c):

For more details, use man gcov, or

look at the complete

gcov manual.

The perf Tool

The Linux perf tool is a new addition to

the suite of Unix performance analysis tools. Like

gprof, perf examines the

call stack in acquiring the performance information from

your program, but unlike gprof,

perf samples only fixed-length stack

fragments. Consequently, programs with deep call stacks can

show some non-intuitive results with perf.

The perf tool has several advantages over

gprof:

perfdoes not require any additional compiler flags beyond-g.perfworks with any compiler.perfhas lower run-time overhead.

But the gprof tool still has several

advantages over perf:

gprofcan produce call counts, as well as raw time data.gprofproduces complete call graphs, not fragments of a call graph.- The

gprofuser interface is much simpler and much better documented.

Like gprof, using perf is a

three-step process:

-

Compile the program with the

-gflag to the C compiler. Only this flag is needed withperf, and this is the same flag used for other things such as for use withgdb. -

Then, run the program by invoking the recording phase of

perfas follows:perf record -e cycles:u --call-graph dwarf program arguments

where program is the program you are profiling, and arguments are the command-line arguments for this run of it. The data recording phase of

perfyields a raw data file named

.perf.dataIf you get error messages like

andlost 1 chunks!

, it generally means that the CLEAR machine you are currently logged in to is overloaded from something being run by one or more other users. If the number of lost chunks is small, you can basically ignore it for our purposes in this lab. You could also try waiting a while and trying it again, or you could log in to one of the other CLEAR machines.Check IO/CPU overload! -

To interpret the data, invoke

perfagain as follows:perf report --stdio --max-stack 6

You probably want to redirect the standard output of this into a file and then use something like

lessto examine the file.You should see something like the following:

# To display the perf.data header info, please use --header/--header-only options. # # # Total Lost Samples: 0 # # Samples: 9K of event 'cycles:u' # Event count (approx.): 8012828499 # # Children Self Command Shared Object Symbol # ........ ........ ............. ................ .......................... # 99.58% 28.11% sample-nonopt sample-nonopt [.] insert_list | --99.49%--insert_list | |--97.79%--insert_list | | | --97.59%--insert_list | | | |--81.58%--insert_list | | | | | |--66.74%--insert_list | | | | | | | |--27.86%--insert_list | | | | | | | |--15.99%--_int_free | | | | | | | |--15.13%--make_nonempty | | | | | | | |--5.25%--is_empty | | | | | | | --2.52%--__cfree (inlined) | | | | | --14.84%--make_nonempty | | malloc | | | --16.00%--make_nonempty | malloc | _int_malloc | |--0.97%--_int_free | --0.69%--make_nonempty 46.80% 15.86% sample-nonopt sample-nonopt [.] make_nonemptyetc. . . .

The interpretation of this data can be a little bit complicated. Here are some of the key points:

- The

number shows the percentage of total CPU time spent by the given function. The name of the function (when known) is shown on the far right asSelf

. Another way to think about theSymbolSelfnumber is that it reflects the fraction of samples that had the function at the bottom (most recent end) of the call stack. The sum of all of theSelfnumbers should be 100 percent. -

The

number is an attempt to measure the total fraction of CPU time that the function, plus everything that calls the function, spends. In particular, note thatChildrenperflooks up the call stack at the callers, not down the call stack at what is called.Also note that the

Childrennumber cannot be relied upon for programs with deep call stacks. Sinceperfdoes not collect arbitrarily deep, full call stacks, some top level functions may have highly inaccurateChildrennumbers. In particular, themainfunction may not have 100 percent as itsChildrennumber. That happens in this lab. - The

andCommand

reflect the command specifics, as well as the object file name and dynamically linked libraries.Shared Object -

The tree-like entries appearing after some symbol line indicate the callers of the function. It is important to keep in mind that

perflooks up the call stack at callers, not down the call stack at what it calls.The percentages shown on a call stack node indicate the fraction of time that had that function at that level of the call stack.

Notice that

insert_listis heavily recursive, so you should expect to find calls to that same function higher up on its call stack.

Using Profiling Information

Now that you have the information from one or more of the profiling methods above, what do you do with it?

- Debugging: Identify parts of the program using more or fewer resources than expected. These may correspond to bugs.

- Tuning: Identify the parts of the program using lots of resources. Try to optimize those parts of the program, since this is where there is the biggest room for improvement.

Within this course, a few lectures have described some optimization techniques, and more techniques will be described in later lectures. It will be helpful to use some of these techniques in the next assignment, where optimizing time and space resources is part of the assignment requirements.

See also B&O Section 5.14.2.

GitHub Repository for This Lab

To obtain your private repo for this lab, please point your browser to this link for the starter code for the lab. Follow the same steps as for previous labs and assignments to create your repository on GitHub and then to clone it onto CLEAR. The directory for your repository for this lab will be

lab-7-performance-profiling-name

where name is your GitHub userid.

Submission

Be sure to git push the appropriate C source files for this lab before 11:55 PM tonight, to get credit for this lab.