Comp360/560 Lab 3: Ray Tracing

First Milestone - April 02, 2019, 11:59pm

Final Submission - April 14, 2019, 6:00pm

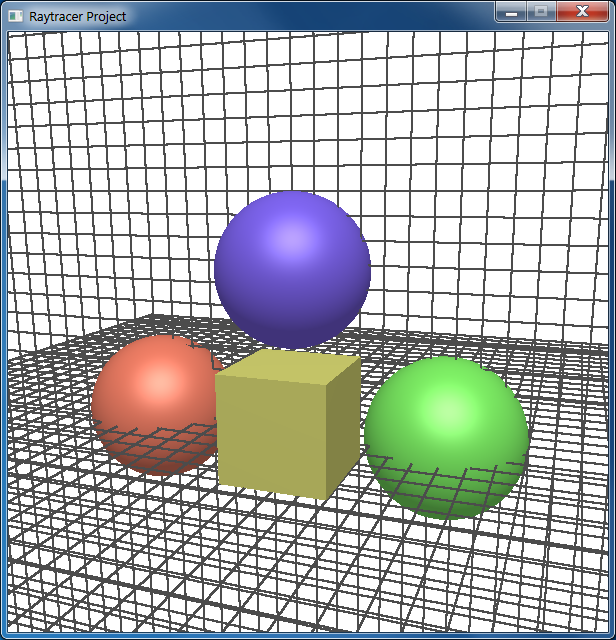

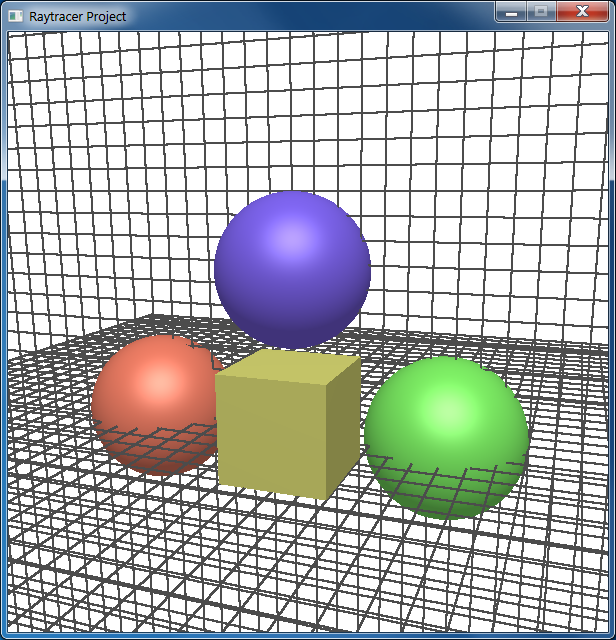

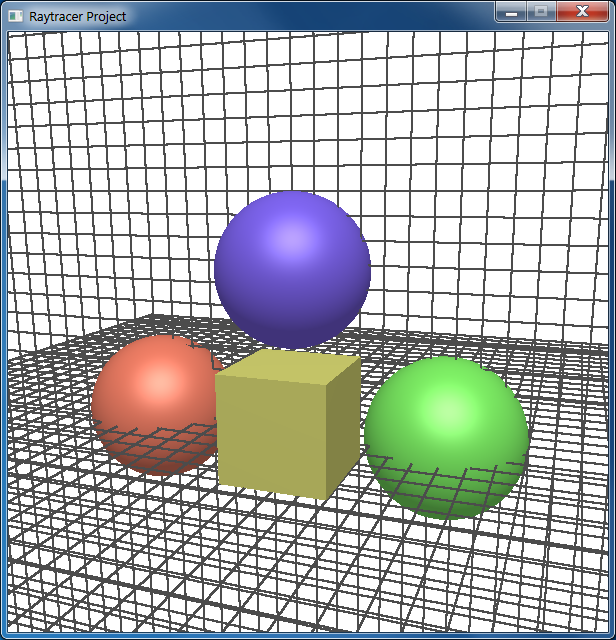

Figure 1: Modeling window


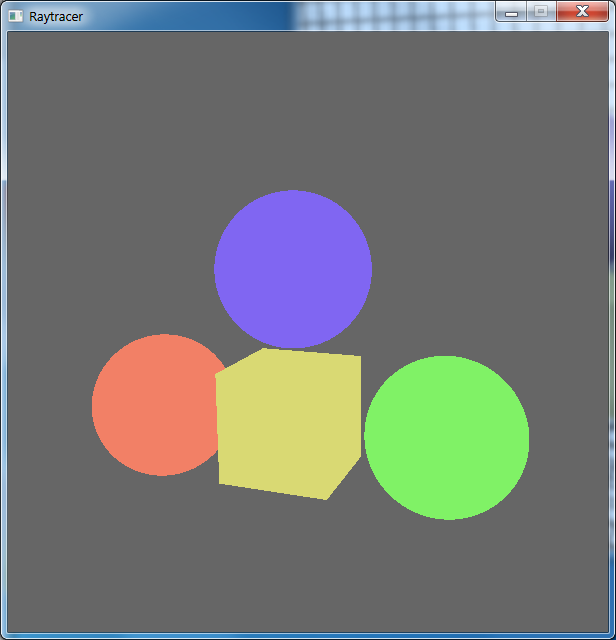

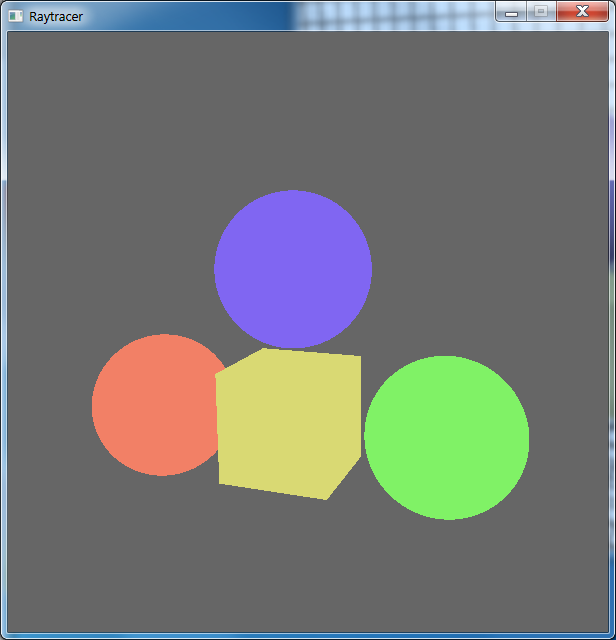

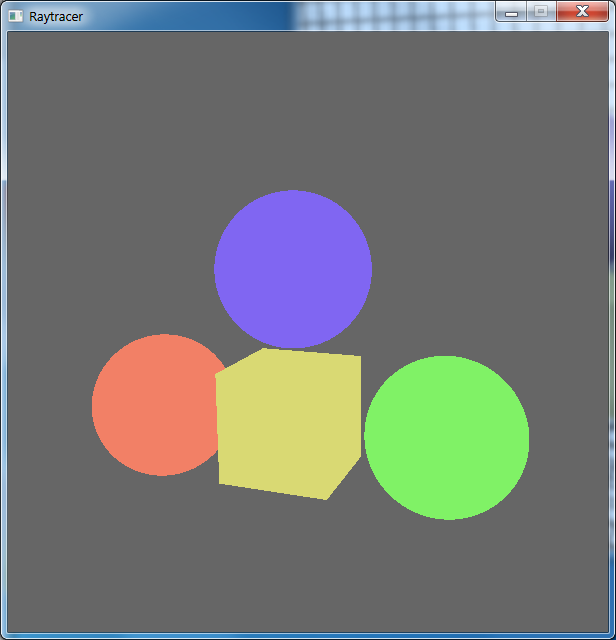

Figure 2: Ray-tracing window

1 Overview

In this project you will implement a static ray tracer for scenes containing primitive 3-dimensional objects, including spheres, ellipsoids, boxes, cylinders, and cones. Your renderer will support multiple light sources, object shadows, reflection, refraction, and transparency. Additionally, you will create at least four interesting 3-dimensional scenes and render these scenes with your ray tracer.

This project will be completed in two parts. The first part will require you to ray-trace spheres and boxes. The second part will require you to ray-trace the rest of the geometric objects listed above and also create your own interesting scenes for ray tracing. This project is worth a total of 120 points (+20 extra points).

You may work with a partner on this lab.

Important: If you find any bug in the project that you think was already there in the starter code and for which you are not responsible, report the issue to the TA ASAP.

2 Project Overview

We will provide you with a framework upon which you can build your project (link to starter code). By default, the program loads a scene file called "default.ray" and renders a polygonal representation of the scene (Figure 1). You can modify this scene using the controls described in the "Program Controls" section. Once you are satisfied with the current scene, you can ray-trace it by pressing F5. Another window will pop up and progressively ray-trace the scene (Figure 2).

The basic framework contains only the ability to parse and ray-trace spheres and boxes without shading. You will need to:

- complete the sphere and box intersection code,

- fill out the rest of the code for ray-tracing other objects,

- write a few user-interface/application enhancements to the given framework.

2.1 First Milestone (40 points)

For the first milestone, your program must do the following:

- Implement the ray-sphere intersection code using the algorithm in the textbook. (6 points)

- Compute face normals at ray-box intersections (computing intersection points already done for you). (4 points)

- Implement the ray-tracing illumination model. (6 points)

- Implement shadow effects, reflection, refraction, and transparency. (20 points)

- Implement the ability to dynamically add spheres and boxes to the scene as well as remove them from the scene. (4 points)

- Ray-trace scenes containing any number of light sources, spheres, and boxes. The other object types are not required at this time.

For how to submit this part, see the first-milestone notes at the end of Section 5, "What To Turn In."

2.2 Final Submission (52 points)

By the project deadline, in addition to the requirements for the first milestone, your program must do the following:

- Render polygonal versions (using OpenGL) of ellipsoids, cylinders, and cones. (12 points)

- Ray-trace ellipsoids, cylinders, and cones. This requires you to compute ray-object intersections and normals at the intersections. (32 points)

- Implement the ability to dynamically add and remove any object from the scene. (4 points)

- Implement the ability to save the current scene into a file in the format as described in "Scene File Format". (4 points)

- Ray-trace scenes containing any number of light sources and objects (but not too many).

2.3 Scene Files/Pretty Pictures (28 points)

Your final submission should contain at least 4 interesting scene files of your own creation. Be creative. Impress us. Together, your files should demonstrate all the ray-tracing effects and all the object types. For each scene file you create, include a picture file in BMP format that contains your renderer's output for that scene, and describe the scene in your README file.

2.4 Extra Credit (20 points)

For the ambitious, you are welcome to implement the following features:

- Ray-trace a torus (see textbook). (12 points)

- Add a GUI for interactively adding, removing, and manipulating light sources. (8 points)

3 Program Controls

Using the given framework will require familiarity with the following controls. You may add or change the controls as you wish, but document your changes in your README file.

3.1 Viewing Mode

Change the camera orientation and position:

- Rotate: Left-click and drag to rotate the camera.

- Zoom: Right-click and drag to zoom the camera in and out.

- Pan: Hold Alt, left-click, and drag to pan the camera.

- Pan (keyboard): Press keys w, a, s, or d to pan up, left, down, or right.

- Tilt (roll): Press keys q and e to tilt the camera to the left or right.

3.2 Selection Mode

Select an object in the scene for manipulation.

- Hold Ctrl to enter Selection Mode.

- Place mouse cursor on an object to outline the object in red. (You may have to move the cursor at least a little after pressing Ctrl.)

- Left-click on the object to select it, after which the property window will pop up. You are now in the "Editing Mode."

3.3 Editing Mode

Edit an object's definition and position.

- The selected object should be semi-transparent with a positioning operator (three orthogonal arrows) at the center of the object.

- Position:

  - Place the mouse cursor on one of the three arrows to highlight the arrow in yellow.

  - Left-click and drag while the arrow is selected to move the object along one of the three orthogonal axes.

- Rotation:

  - Hold Shift while in "Editing Mode" to show the rotation operator.

  - Place the mouse cursor on one of the three circles to highlight the circle in yellow.

  - Left-click and drag while the circle is selected to rotate the object about one of the three orthogonal axes.

- Change the values in the property window to interactively update the scene.

- To exit "Editing Mode": hold Ctrl and left-click in an empty region to deselect the current object.

3.4 Application Controls

- Open a scene file: In the modeling window, press Ctrl + 'o'.

- Save the current scene file: In the modeling window, press Ctrl + 's'.

- Open the ray tracing window (ray-trace): In the modeling window, press F5.

- Close the ray tracing window: In the modeling window, press F4.

- Save the current ray-traced image: In the ray-tracing window, press Ctrl + 's'.

3.5 Object Adding/Removing

Although you are free to choose which keys are used to add or remove objects, here are some suggested controls (the structure already given in the starter code):

- To add an object, press 'n' followed by a number, or 'n' again to cancel object adding.

- To remove the selected object, press 'm'.

Make sure to document if you choose your own controls.

4 Suggested Plan

The following is a short outline that describes a suggested plan of attack and some hints. Plan well for completing this project.

4.1 First Milestone

- Rewrite the sphere intersection code using the algorithm in the textbook. Compute normals for both a sphere and a box. You will need to compute the intensity of the light at a point of intersection.

- Add rays that act as shadow rays to determine shadowing effects in your scene.

- Add reflective rays for mirror effects.

- Add refractive rays for transparency effects.
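The reflective and refractive rays in the plan above each need a direction. The C++ sketch below shows one common way to compute them: mirror reflection about the normal, and Snell's law with a check for total internal reflection. The `Vec3` type and the names `reflectDir` and `refractDir` are hypothetical (the starter code has its own vector types), both helpers assume unit-length inputs with the normal facing against the incoming ray, and the textbook's formulation remains the one to follow if it differs.

```cpp
#include <cmath>

// Hypothetical minimal vector type; the starter code provides its own.
struct Vec3 { double x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
inline double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Mirror the unit incoming direction d about the unit normal n.
inline Vec3 reflectDir(Vec3 d, Vec3 n) {
    return d - (2.0 * dot(d, n)) * n;
}

// Refract the unit direction d at a surface with unit normal n (dot(d, n) < 0).
// eta is (index of the medium being left) / (index of the medium being entered).
// Returns false on total internal reflection, in which case `out` is untouched.
inline bool refractDir(Vec3 d, Vec3 n, double eta, Vec3& out) {
    const double cosI = -dot(d, n);
    const double k = 1.0 - eta * eta * (1.0 - cosI * cosI);
    if (k < 0.0) return false;  // no transmitted ray exists
    out = eta * d + (eta * cosI - std::sqrt(k)) * n;
    return true;
}
```

When a ray leaves an object, the caller would flip the normal and invert `eta` before calling `refractDir`; how exiting rays are detected is left to your implementation.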

4.2 Final Submission

After the first milestone, you should have the majority of the functionality of the ray tracer. The only remaining tasks are computing normals to the other surfaces and computing intersection points between the other surfaces and rays. Pseudo-code for these computations is provided in the textbook.
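As a rough illustration of what these remaining computations can look like, the C++ sketch below intersects a ray with an ellipsoid by transforming the ray into the object space of a unit sphere, solving the quadratic there, and mapping the hit point and normal back to world space. `Vec4`, `Mat4`, `Hit`, and `intersectEllipsoid` are hypothetical stand-ins, not the starter code's types, and the sketch assumes the object's matrix and its precomputed inverse are passed in. Treat it as a sketch under those assumptions; the textbook's algorithm is the reference.

```cpp
#include <cmath>

// Hypothetical stand-ins for the starter code's vector and matrix types.
struct Vec4 { double x, y, z, w; };  // w = 1 for points, w = 0 for vectors
struct Mat4 { double m[4][4]; };     // row-major 4x4 matrix

Vec4 mul(const Mat4& a, const Vec4& v) {
    const double in[4] = {v.x, v.y, v.z, v.w};
    double out[4] = {0.0, 0.0, 0.0, 0.0};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            out[r] += a.m[r][c] * in[c];
    return {out[0], out[1], out[2], out[3]};
}

Mat4 transpose(const Mat4& a) {
    Mat4 t;
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            t.m[c][r] = a.m[r][c];
    return t;
}

struct Hit { bool found; double t; Vec4 point; Vec4 normal; };

// mat maps the unit sphere onto the ellipsoid; imat is its precomputed inverse.
Hit intersectEllipsoid(const Mat4& mat, const Mat4& imat,
                       const Vec4& origin, const Vec4& dir) {
    const Hit miss = {false, 0.0, {0.0, 0.0, 0.0, 1.0}, {0.0, 0.0, 0.0, 0.0}};
    const double eps = 1e-9;  // hypothetical tolerance against self-intersection

    // Object-space ray. The direction is deliberately NOT normalized, so the
    // parameter t means the same thing in object space and in world space.
    const Vec4 o = mul(imat, origin);
    const Vec4 d = mul(imat, dir);

    // Solve |o + t d|^2 = 1 for the unit sphere centered at the origin.
    const double a = d.x * d.x + d.y * d.y + d.z * d.z;
    const double b = 2.0 * (o.x * d.x + o.y * d.y + o.z * d.z);
    const double c = o.x * o.x + o.y * o.y + o.z * o.z - 1.0;
    const double disc = b * b - 4.0 * a * c;
    if (a == 0.0 || disc < 0.0) return miss;

    const double s = std::sqrt(disc);
    double t = (-b - s) / (2.0 * a);           // nearer root first
    if (t < eps) t = (-b + s) / (2.0 * a);     // origin inside the shape
    if (t < eps) return miss;

    // On the unit sphere the hit point doubles as the undeformed normal.
    const Vec4 q = {o.x + t * d.x, o.y + t * d.y, o.z + t * d.z, 1.0};
    const Vec4 p = mul(mat, q);

    // Normal: inverse transpose of the deformation, then force w back to 0
    // before normalizing (the translation part can leak into w).
    Vec4 n = mul(transpose(imat), Vec4{q.x, q.y, q.z, 0.0});
    n.w = 0.0;
    const double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    n = {n.x / len, n.y / len, n.z / len, 0.0};

    const Hit hit = {true, t, p, n};
    return hit;
}
```

The same pattern applies to cylinders and cones: only the canonical-shape solve in the middle changes, while the transforms before and after stay the same.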

4.3 Implementation Notes and Hints

Here are some notes on the specifics for implementing this lab. Please read the following sections before you start coding your solution.

4.3.1 General

- See the course textbook for the algorithms and calculations used in recursive ray tracing. For alternative presentations, you might also consult Foley and van Dam's book or resources on the web. You may use these alternatives to help you understand ray tracing, but only the algorithm presented in the course textbook will be accepted. For example, one way to ray-trace an ellipsoid is to solve the implicit equation of the ellipsoid together with the equation of the ray. However, this approach is not what we are looking for.

- In the textbook's presentation, intersecting a ray with a basic geometric shape can be reduced to intersecting with some canonical shape. For example, intersecting a ray with an ellipsoid can be reduced to intersecting a ray with a unit sphere (centered at the origin). An ellipsoid can be viewed as the image of an affine transformation applied to the unit sphere. Therefore, if we apply the inverse transformation to the ray, compute the intersection between the transformed ray and the unit sphere, and forward-transform the intersection point, then we will have the intersection point on the ellipsoid. This approach should be the basis for all of your intersection code (please look at the box example in the given code). Here is a Mathematica file that illustrates this intersection algorithm. Normals are handled similarly.

- Remember, when a ray intersects a reflective or refractive surface, rays are traced recursively from the intersection point.

- Shadow rays are NOT refracted. The contribution factor of a light source (along a particular shadow ray) is a real number within [0,1]. If a shadow ray hits an object with a positive transparency coefficient t, scale the factor by t and continue tracing the shadow ray.

- Your program may assume that input files are well-formed. Still, be careful when you modify the files directly.

- You should treat the transparency as a weight that you apply to your refracted ray in the same way you use the reflective coefficients to weigh the reflected ray.

- The general outline for the trace() function is as follows:

      TraceResult trace(Ray r):
          hit = check whether any intersection point has been found
          if hit:
              // first add the ambient contribution, independent of the light sources
              result = ambient_color * ambient_light
              for each light source L:
                  shadow = compute the shadow factor (wrt L) as described on this project page
                  diffuse = compute the diffuse component wrt L
                  specular = compute the specular component wrt L
                  result += shadow * (diffuse*diffuse_coeff*light_color + specular*specular_coeff*light_color)
              // start from black so that a skipped branch contributes nothing
              reflect = refract = black
              if reflection_coeff > 0:
                  reflect = compute color of the reflected ray as described in the book (call trace again)
              if transparency > 0:
                  refract = compute color of the refracted ray as described in the book (call trace again)
              result += reflection_coeff*reflect + transparency*refract
              // you should cap each channel of your result to 1.
              return result
          return no hit

- You may assume that the refraction index of air is 1.

- Let L be the vector from the intersection point to the light source, and let N be the normal at the intersection point. You only want to add the specular and diffuse components if (L·N) > 0.

- The gray background color in the given code is only for demonstration. You should replace the gray background with a black background.

- To compute the normals for a deformed shape, you would need to multiply the undeformed normal by the inverse transpose of the deformation matrix. Please refer to the textbook for more details.

- A normal is a 3D vector, so its fourth (homogeneous) component is 0. A common mistake is that the fourth component of the deformed normal is not 0 after the transformation, so when you normalize that vector, the computed magnitude is incorrect. Before normalizing any 3D vector, make sure that the fourth component of its homogeneous representation is 0.

- To debug or visualize normal vectors, you may use their coordinates as actual object colors. In this case, you may have to adjust value ranges from [-1,1] to [0,1]. Then the colors should vary smoothly on smooth surfaces and correspond to correct directions. For example, points on the surface facing the positive x-direction might appear red if we map the x-coordinate of the normal to red in RGB.

- Since rendering a complex scene can take several minutes, you are advised to ray-trace complex scenes in "Release Mode" rather than "Debug Mode." Also start the project from Visual C++ with Ctrl + F5 (Start Without Debugging) rather than F5. For comparison, the TA's ray tracer takes 146 s (about 2.4 min) in "Release Mode" and 557 s (about 9.3 min) in "Debug Mode" to render one particular complex scene.

- Following the last point, pay attention to code speed as well as code readability.

- Try not to interact with the running program while it is ray-tracing your scene.

- When you write code for ray-object intersection, use inverse deformation matrices that are already computed rather than inverting the matrices directly (see the box example).

- Look for "TODO" in code comments for where you will have to add more code. See also "NOTE."

- To remind the user, including the TA, print to the console messages about how to add or remove objects.

- Common and potential mistakes:

  - forgetting to reset homogeneous coordinates correctly (1 for points, 0 for vectors, as mentioned above),

  - normalizing vectors when you shouldn't (e.g., ray directions),

  - forgetting to normalize vectors when you should (e.g., face normals),

  - forgetting to update inverse transformation matrices (e.g., _imat),

  - forgetting to update inner matrices with updateTransform(), or updating at the wrong time,

  - marking ray-tracing results as hit/miss incorrectly,

  - recursively ray-tracing at the same point (of the same object),

  - using exact equality for floating-point numbers (precision errors).
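Three of the hints above are easy to capture in small helpers. The C++ sketch below shows a tolerance-based comparison instead of exact floating-point equality, a nudge of a secondary ray's origin so that it does not hit the same point again, and the mapping of normal coordinates from [-1,1] to [0,1] for debugging. All names and the tolerance value are hypothetical; choose a tolerance that suits the scale of your scenes.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };   // hypothetical minimal vector type
struct Color { double r, g, b; };

const double kEpsilon = 1e-6;      // assumed tolerance, not a prescribed value

// Compare floating-point values with a tolerance rather than with ==.
inline bool nearlyEqual(double a, double b, double eps = kEpsilon) {
    return std::fabs(a - b) <= eps;
}

// Move the origin of a reflected or refracted ray slightly along its
// direction so the recursive trace does not re-hit the same surface point.
inline Vec3 offsetOrigin(Vec3 p, Vec3 dir, double eps = kEpsilon) {
    return {p.x + eps * dir.x, p.y + eps * dir.y, p.z + eps * dir.z};
}

// Map a unit normal from [-1, 1] per coordinate to an RGB color in [0, 1].
inline Color normalToColor(Vec3 n) {
    return {0.5 * (n.x + 1.0), 0.5 * (n.y + 1.0), 0.5 * (n.z + 1.0)};
}
```

With this mapping, a normal pointing along the positive x-axis has a red channel of 1, while one pointing along the negative x-axis has a red channel of 0.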

4.3.2 Operator

- An operator is the axis that appears at the center of a geometric object when you select the object. Its function is to allow direct translation and rotation in the modeling window. When you press Shift while an object is selected, the axis turns into three circles, which denote the ability to perform direct rotation.

  Each operator has two operands: primary and secondary. Every time an operator is moved or rotated, the operands are notified that an action has been performed on the operator (see Operator.h). In the given code, the primary operand is just a geometric object (or Geometry, like a Sphere). The secondary operand is the property window. This means that every time an operator is translated or rotated, the associated Geometry and the fields in the property window are also changed. These changes correspond to the rotate() and translate() functions in ShadeAndShapes.cpp and PropertyWindow.cpp. You have to fill out these functions for each of the remaining geometric objects in ShadeAndShapes.cpp (the box and the sphere have been done for you).

- Now we can consider adding or removing geometric objects from the scene. If you look at the function prepScene(), you can observe that one operator is created for each geometric object. Furthermore, there is a link from geometry to operator (the _geom2op map). If we are adding or removing objects, we need to update the mapping from geometry to operator. This update can be done just by calling prepScene() again, or you can write a more efficient version.

- Finally, the following is something to watch out for when you are writing your code. Note that an operator only comes into play when a geometric object is selected. When an object is selected, we need to activate the operator in the current editing state. When an object is deselected, we need to deactivate the operator in the current editing state.

  When you are deleting an object that is selected (and in the editing mode), you will need to deactivate that operator. This deactivation code should be similar to, if not the same as, the code in the INPUT_SELECTING section of the mouseEvent() function in MainWindow.cpp.
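The bookkeeping described in this section can be pictured with the following C++ sketch. It is not the starter code: `Geometry`, `Operator`, and `SceneEditor` here are simplified, hypothetical stand-ins, and the `geom2op` member only plays the role of the `_geom2op` map. The point is the order of operations: create an operator when a geometry is added, and deactivate the operator before removing a selected geometry.

```cpp
#include <algorithm>
#include <map>
#include <memory>
#include <utility>
#include <vector>

// Simplified, hypothetical stand-ins for the starter code's classes.
struct Geometry { virtual ~Geometry() = default; };
struct Operator { bool active = false; };

struct SceneEditor {
    std::vector<std::unique_ptr<Geometry>> geoms;
    std::map<Geometry*, std::unique_ptr<Operator>> geom2op;  // geometry -> operator
    Geometry* selected = nullptr;

    // Adding a geometry also creates and registers its operator.
    Geometry* add(std::unique_ptr<Geometry> g) {
        Geometry* raw = g.get();
        geoms.push_back(std::move(g));
        geom2op[raw] = std::unique_ptr<Operator>(new Operator());
        return raw;
    }

    void select(Geometry* g) {
        deselect();
        geom2op.at(g)->active = true;  // throws if g is not in the scene
        selected = g;
    }

    void deselect() {
        if (selected) {
            geom2op.at(selected)->active = false;
            selected = nullptr;
        }
    }

    // Removing a geometry: deactivate its operator first if it is selected,
    // then drop the map entry and the geometry itself.
    void remove(Geometry* g) {
        if (g == selected) deselect();
        geom2op.erase(g);
        geoms.erase(std::remove_if(geoms.begin(), geoms.end(),
                        [g](const std::unique_ptr<Geometry>& p) { return p.get() == g; }),
                    geoms.end());
    }
};
```

Updating the map incrementally like this is the "more efficient version" alternative to rebuilding everything; calling prepScene() again is the simpler route.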

4.3.3 Code Base

Here is a short description of the files you'll be working with. This project frequently uses the visitor pattern.

- ArcBall.h/cpp

  - Camera manipulator.

  - No need to change anything.

- Common.h

  - Some code for common definitions. Utility functions.

- Geometry.h/cpp

  - Defines the base class for the different shapes.

  - Visitor for the different geometric shapes.

  - Some geometry-utility functions defined in GeometryUtils.

- MainWindow.h/cpp

  - Main window. Modeling window.

  - Hooks operators to geometries.

  - Contains logic for program controls like viewing, operators, and ray tracing.

  - Need to add dynamic add/delete feature.

  - Remember to connect operators to geometries when dynamically creating new geometries. Similarly, disconnect when deleting.

  - Probably need to heavily modify this to add other UI features.

- Matrix.h

- Operator.h

  - Operator is the object that allows direct position/rotation manipulation in the modeling window. It shows up as three axes or three rings. You can use the operator to translate or rotate your geometry directly in the modeling window.

  - Shouldn't need to add anything here.

- PropertyWindow.h/cpp

- Raytracer.h/cpp

- RaytracerViewer.h/cpp

  - The viewing window for the ray tracing result.

  - Shouldn't need to add anything here.

- Renderer.h

  - Base class for zbuffer and ray tracing output.

- Scene.h/cpp

- ShadeAndShapes.h/cpp

  - Contains definitions for lights and materials.

  - Definitions for all the shapes.

  - Contains Intersector, which is a visitor for the shapes that performs intersection.

  - Need to fill out the member fields (axes, lengths) for each of the shapes.

  - Need to define how Operator functions affect each shape (look at Sphere and Box for reference).

  - Need to fill out the intersection code (visit()) for each of the shapes.

- ZBufferRenderer.h/cpp

5 What To Turn In

Use the given "lab_raytracer" directory. This directory should contain your source code and all files needed to build your program in Visual C++. After completing the project, you should have scene files (.ray) in files/ and rendered pictures (.bmp) in images/. The grader should be able to load your scene files and test them. Also include a README file containing:

- The names of you and your partner (or only your name if working alone),

- A brief overview of any major modification to the code,

- Information about your scene files,

- Any known bugs or incomplete features,

- Any other implementation details you think your grader should know.

Zip up this folder and follow the submission instructions on the course web page. Don't forget to remove unnecessary files before zipping the folder.

For the first milestone:

- The README needs only to describe how far you are in the project and does not need to be detailed and complete.

- You may include scene files that test the functionality of the parts you have completed.

- The submission will be checked for progress only and will not affect the final score. It is to keep you on track. However, if you have not completed this part by the first deadline, you may not have enough time for the rest of the project.

6 Scene File Format

A scene file is an ASCII text file containing data that define lighting and geometry for a ray-traced scene. A scene file is organized into three parts in this specific order: lighting attributes, object definitions, and the camera definition.

In a scene file, values are separated by one or more blank spaces. Scene files support C++-style double-slash (//) comments: the two slash characters mean that input should be ignored until the next newline character. An example file appears at the end of this document. You may also take a look at the initial "default.ray" in the folder "files/".
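To make the comment rule concrete, here is a minimal C++ sketch that splits scene text into whitespace-separated tokens and discards everything from `//` to the end of each line. The function name `tokenizeScene` is hypothetical, and the starter code's own parser may work differently.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Split scene-file text into whitespace-separated tokens, ignoring
// everything from "//" up to the end of the line.
std::vector<std::string> tokenizeScene(std::istream& in) {
    std::vector<std::string> tokens;
    std::string line;
    while (std::getline(in, line)) {
        const std::string::size_type pos = line.find("//");
        if (pos != std::string::npos) line.erase(pos);  // drop the comment
        std::istringstream words(line);
        std::string tok;
        while (words >> tok) tokens.push_back(tok);
    }
    return tokens;
}
```

Comment-only lines and blank lines produce no tokens, so the values can afterwards be read in sequence regardless of how the file is laid out.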

6.1 Lighting Attributes

The lighting of the scene appears in the following sequence:

- The scene's ambient light color (three floating-point values).

- The number of point light sources in the scene - must be at least 1 (one integer).

- For each point light source:

  - The light's position in space (three floating-point values).

  - The light's color (three floating-point values).

6.2 Object Definitions

Beneath the lighting attributes, the input file will contain at least one object definition. Object definitions are given with the following sequence:

- The number of objects in the scene - must be at least 1 (one integer).

- For each object:

  - The object's type, one of the strings "sphere", "ellipsoid", "box", "cone", or "cylinder" (or "torus").

  - A set of numbers defining the object's geometry (the format varies; see below).

  - A set of numbers defining the object's material properties. This format is the same for all objects.

The object definition formats are as follows:

Sphere

A sphere is defined by the following values:

- The coordinates of the center of the sphere (three floating-point values).

- Radius of the sphere (one floating-point value).

Ellipsoid

An ellipsoid is defined by the following values:

- The coordinates of the center of the ellipsoid (three floating-point values).

- Unit vector in the direction of the ellipsoid's first axis, v1 (three floating-point values).

- Unit vector in the direction of the ellipsoid's second axis, v2 (three floating-point values).

- Unit vector in the direction of the ellipsoid's third axis, v3 (three floating-point values).

- Length of ellipsoid along v1 (one floating-point value).

- Length of ellipsoid along v2 (one floating-point value).

- Length of ellipsoid along v3 (one floating-point value).

Note that if all axis lengths are equal, the result is a sphere.

Box

A box is defined by the following values:

- The coordinates of a corner of the box (three floating-point values).

- Unit vector in the direction of the box's length, vl (three floating-point values).

- Unit vector in the direction of the box's width, vw (three floating-point values).

- Unit vector in the direction of the box's height, vh (three floating-point values).

- Length of box (one floating-point value).

- Width of box (one floating-point value).

- Height of box (one floating-point value).

The box should be drawn on the positive side of its corner as measured by the coordinate system given by the vectors vl, vw, and vh. Note that if the three vectors are orthonormal and length = width = height, then the result is a cube.
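One way to read "the positive side of its corner" is that every point of the box is the corner plus non-negative multiples of vl, vw, and vh, up to the length, width, and height. The C++ sketch below computes the eight corner points under that reading; `Vec3` and `boxCorners` are hypothetical names used only for illustration.

```cpp
#include <array>

struct Vec3 { double x, y, z; };  // hypothetical minimal vector type

// Eight corners of a box given its corner point, three unit direction
// vectors, and the extents along them. Bit 0 of the index selects the
// length direction, bit 1 the width direction, bit 2 the height direction.
std::array<Vec3, 8> boxCorners(Vec3 corner, Vec3 vl, Vec3 vw, Vec3 vh,
                               double length, double width, double height) {
    std::array<Vec3, 8> out;
    for (int i = 0; i < 8; ++i) {
        const double a = (i & 1) ? length : 0.0;
        const double b = (i & 2) ? width : 0.0;
        const double c = (i & 4) ? height : 0.0;
        out[i] = {corner.x + a * vl.x + b * vw.x + c * vh.x,
                  corner.y + a * vl.y + b * vw.y + c * vh.y,
                  corner.z + a * vl.z + b * vw.z + c * vh.z};
    }
    return out;
}
```

For a box with corner (0, 0, 0), axis-aligned direction vectors, and extents 0.5, 0.5, and 1, the corner opposite the given one is (0.5, 0.5, 1).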

Cylinder

An elliptical cylinder is defined by the following values:

- The coordinates of the center of the base of the cylinder (three floating-point values).

- Unit vector along the first axis of the ellipse that forms the cylinder's base (three floating-point values).

- Unit vector along the second axis of this ellipse (three floating-point values).

- Unit vector in the direction of the cylinder's central axis (three floating-point values).

- Length of the first axis of the cylinder's elliptical base (one floating-point value).

- Length of the second axis of the cylinder's elliptical base (one floating-point value).

- Height of the cylinder along its axis (one floating-point value).

Cone

A cone is defined by the following values:

- The coordinates of the center of the base of the cone (three floating-point values).

- Unit vector along the first axis of the ellipse that forms the cone's base (three floating-point values).

- Unit vector along the second axis of this ellipse (three floating-point values).

- Unit vector in the direction of the cone's central axis (three floating-point values).

- Length of the first axis of the cone's elliptical base (one floating-point value).

- Length of the second axis of the cone's elliptical base (one floating-point value).

- Height of the cone along the axis, i.e., the distance from the center of the cone's base to the vertex of the cone (one floating-point value).

Material Properties

Immediately after the definition of an object's geometry, its material properties are given as follows:

- Ambient coefficients (three floating-point values between 0 and 1).

- Diffuse coefficients (three floating-point values between 0 and 1).

- Specular coefficients (three floating-point values between 0 and 1).

- Specular exponent (one floating-point value).

- Reflective coefficient (one floating-point value between 0 and 1).

- Transparency coefficient (one floating-point value between 0 and 1).

- Index of refraction (one floating-point value not less than 1).

6.3 Camera definition

After the object definitions, the next line contains 19 floating-point values. These values describe the orientation and the position of the camera. Having this description allows you to save the camera description along with your object and lighting descriptions.

6.4 Sample File

// Lighting

0.3 0.3 0.3 // R, G, B of ambient color

1 // Number of light sources

0 5 5 // x, y, z position of the first light source

1 1 1 // Color of the first light source

// More lights

// Objects

2 // Number of objects

// First object

sphere // Type

0 0 0 // x, y, z coordinates of center of the sphere

1.5 // radius of the sphere

0.1 0.1 0.1 // Ambient coefficient

0.45 0.45 0.45 // Diffuse coefficient

0.5 0.5 0.5 // Specular coefficient

5 // Specular exponent

0.5 // Reflection coefficient

0.1 // Transparency coefficient

1.6 // Index of refraction

// Second object

box // Type

0 0 0 // x, y, z coordinates of corner of box

1 0 0 // Normalized vector of the direction of Length (vl)

0 1 0 // Normalized vector of the direction of Width (vw).

0 0 1 // Normalized vector of the direction of Height (vh).

.5 .5 1 // The Length, Width, and Height of the box, in floats.

0.1 0.1 0.1 // Ambient coefficient

0.45 0.45 0.45 // Diffuse coefficient

0.5 0.5 0.5 // Specular coefficient

5 // Specular exponent

0.5 // Reflection coefficient

0.1 // Transparency coefficient

1.1 // Index of refraction

1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1 0 0 2 // The camera description